Analyzing/fighting the algorithm

In the past five years, numerous scholars have published on algorithms. In the social sciences and the humanities, their work tries to evaluate to what extent and how the algorithm favors content considered problematic: conspiratorial content, homophobic content, invisibilization of minorities, discrimination against queer vocabulary, etc. Other, more cautious texts try to find out whether the YouTube algorithm really produces biased results or favors specific videos (Kirdemir et al. 2021). Most of this research is driven by a moral or ethical obligation, which is reflected in the recurrent term “fairness”, and which can culminate in pleas for more civil or ethical responsibility and accountability. In short, most research on the subject treats the algorithm responsible for the platform’s recommendation process as an obstacle that one must become aware of or overcome in order to achieve more transparency.

A twofold method

My recent platform-related research on amateur videos by the so-called Yellow vests movement (Hamers 2022) used YouTube as a reservoir of amateur footage from which to build a research corpus. Concretely, this research combined three working methods: keyword search using tags associated with the movement, subscription to several YouTube accounts active in the protests, and consultation of YouTube pages administered by alternative news broadcasters that were particularly active in the context of the protests. This threefold method, similar to what Ulrike Lune Riboni called “reasoned wandering” (“déambulation raisonnée”; Riboni 2016), produced a collection of about 780 different videos. I then submitted these videos to different hermeneutic and epistemic operations, which delivered an initial overview of several recurrent visual issues or motifs. In short: first, my research was entirely determined by the YouTube algorithm. Then, once the videos had been extracted from the platform, I started to work on the raw material, free from the effects of the algorithm.

An epistemic medium

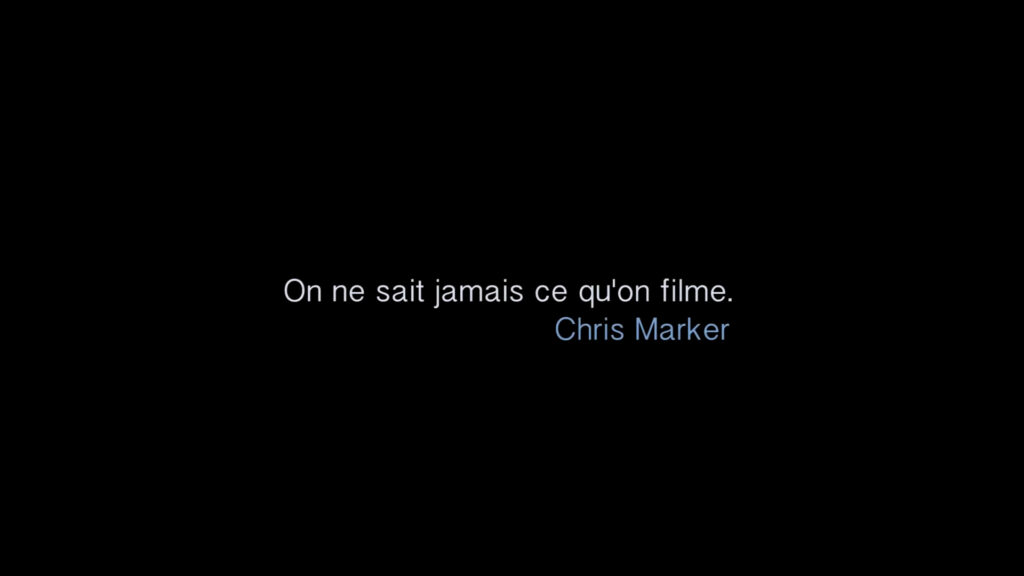

At the origins of my research on the Yellow vests videos, the intention was to understand how this social movement could constitute a source of alternative media representations. However, my research gradually shifted to a more aesthetic question that concerned the representation of vision and visibility and of the act of filming in the context of street riots. This evolution was deeply shaped by two particular moments. 1/ During my searching and scrolling exploration of YouTube, a very short video, shot in 2016 (two years before the movement emerged), appeared in the platform’s suggestions. This video by Matthieu Bareyre and Thibaut Dufait, entitled On ne sait jamais ce qu’on filme (“You never know what you are filming”), showed how members of the CRS (“Compagnies républicaines de sécurité”, a corps of the French national police mainly involved in riot control) hit and punched two handcuffed demonstrators who were offering no resistance.

The title of this two-minute video refers to the fact that the two directors did not see the punches while shooting. But it is also a deliberate quotation of a famous sentence in Chris Marker’s Le fond de l’air est rouge (A Grin Without a Cat, 1977), commenting on how the meaning of archival footage evolves over time. As such, it establishes a link between my research and Marker’s film, which is first of all a film about the circulation of visual motifs, especially of the gestures of protest.

More concretely: it linked pictures of the protesters filming police forces by holding a smartphone at arm’s length, as one of the recurring subjects of the “visual repertoire” (Bertho 2011) of clashes between Yellow vests and law enforcement authorities, to a visual lineage of older struggles.

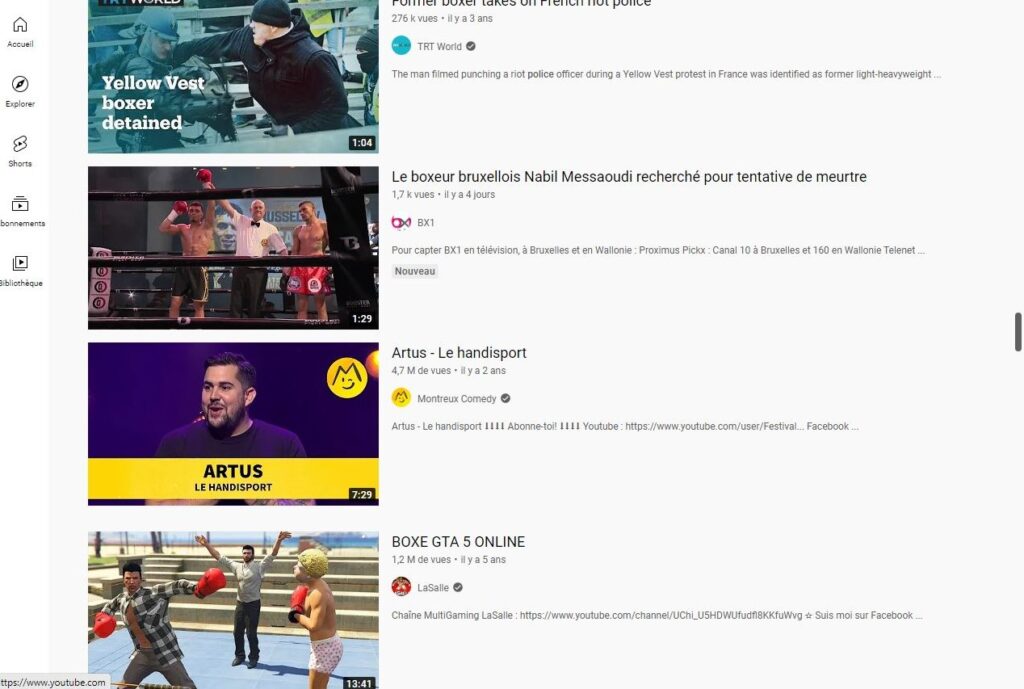

2/ While I was looking for different videos of the French boxing champion Christophe Dettinger pushing back a squadron of CRS during a Yellow vests demonstration (Fig. 16-17), the search with the keywords “boxing” and “police” unsurprisingly generated various results not related to the Yellow vests. The famous video of Dettinger indeed led to different video game excerpts, but also to some scenes from Chaplin short films (Fig. 18-21). The recommendation algorithm produced an edit, or a montage, a way of editing the history of moving images.

Two observations must be drawn from this. Firstly, in absolute terms, one does not need the platform to recommend the short film by Bareyre and Dufait, or the Chaplin excerpts, in order for the circulation of visual motifs to be revealed. But it is indeed thanks to the platform’s serendipity that my research took a new direction. Secondly, as a corollary, the algorithm has not been an obstacle to my research but an adjuvant of it. Rather than reflecting on what kind of epistemic practices the platform hinders and how to bypass its algorithmic selection processes, this previous research suggests that YouTube can be considered an epistemic medium. That is why, rather than pursuing de-opacification for the sake of more transparency and predictability, one should ask how the outputs of the device itself can be handled as symptoms of a system driven by algorithms yet productive of knowledge.

Heuristics of montage

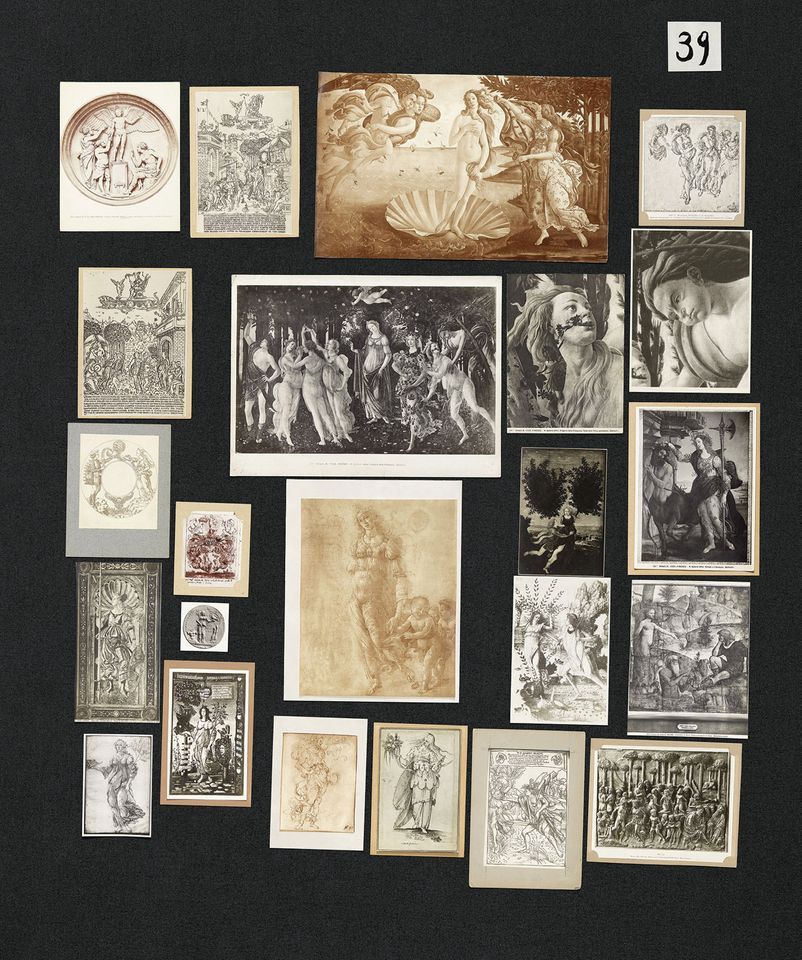

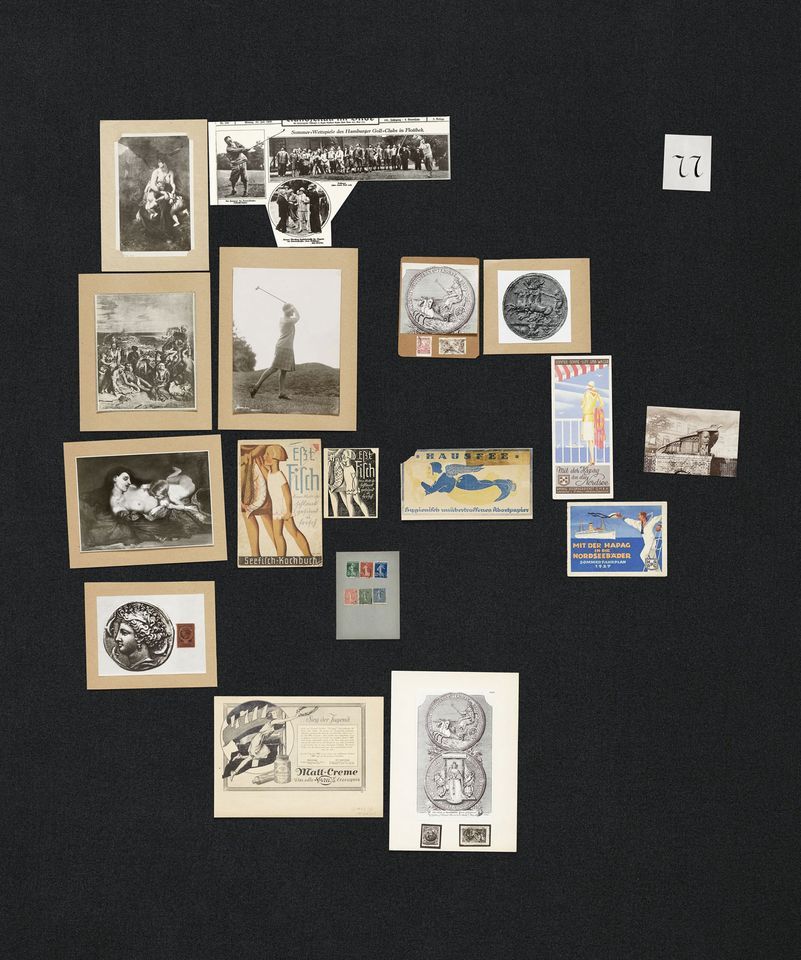

From 1921 to 1929, art historian Aby Warburg produced one of his masterpieces, the atlas Mnemosyne, in the form of several tables. These tables contained a set of reproductions of works of art, fragments, but also contemporary documents such as advertising clippings (Fig. 22-23). This famous work fundamentally revolutionized the study of visual representations because it opened art history to the question of the circulation of motifs and figures, to their survival, their dissemination and their moments of rupture, in order to elaborate an iconology of the intervals. In his trilogy L’oeil de l’histoire, philosopher Georges Didi-Huberman has drawn from Warburg’s work a theory of the heuristics of montage specific to the form of the atlas (Didi-Huberman 2009/2010/2011). Its main ingredients are: the wandering of a reader-spectator, the revealing association of heterogeneous pictures, the identification of formal survivals (“Nachleben”) and perpetual reconfiguration.

In 2012, Georges Didi-Huberman and Arno Gisinger presented their multimedia installation Histoires de fantômes pour grandes personnes at the Fresnoy in Lille. The second room of the exhibition housed the main piece of the work, Mnemosyne 42 (Fig. 24). For this part of the exhibition, the creators had arranged 38 screens of varying sizes on the floor of the main hall, which covers about 1,000 m². Each screen showed a different scene from fiction films, non-fictional images (still or moving), or photographic reproductions of fragments of paintings, sculptures and engravings. The spectator leant over a pit to watch the projections and could walk around the “board”. Through Mnemosyne 42, Didi-Huberman and Gisinger thus enabled the viewer to circulate freely and to experience the visual reconfiguration that was the basis of the Warburgian atlas. Moreover, each sequence looped and had its own duration. As time went by, the visitor witnessed a differential evolution of simultaneities: each sequence, whether long or short, coincided with different sequences every time it recurred. The work was thus in a state of perpetual reconfiguration, one of the core principles of the Warburgian atlas, proposing an ever-new set of possible combinations, a perpetual and potentially heuristic re-editing.

Becoming unpredictable

Can the YouTube algorithm and its (un)predictable outputs work as a device of heuristic montage and of open and perpetual reconfiguration? One possible way to trigger such a use or functioning of the platform might first be to provoke unpredictable outputs by becoming an unpredictable user. In this project, transparency would no longer be a goal, as the outputs of an opaque system can become the main tools of a new epistemology. The opacity at stake, however, would be bilateral. Of course, we will probably never know how the algorithm really works. But, the other way around, the algorithm never really understands what the user does with the images it recommends. That is why we can finally add to Marker’s sentence “you never know what you are filming”: “the algorithm never knows what we see”.

References

Bertho, Alain. (2011). Émeutes sur internet: montrer l’indicible? Journal des anthropologues, 126-127, 435-449. https://journals.openedition.org/jda/5586

Didi-Huberman, Georges. (2009). Quand les images prennent position, Paris, Minuit.

Didi-Huberman, Georges. (2010). Remontages du temps subi, Paris, Minuit.

Didi-Huberman, Georges. (2011). Atlas ou le gai savoir inquiet, Paris, Minuit.

Hamers, Jeremy. (2022). The Act of Filming: Yellow Vests, Smartphones and the Gesturality of Struggle. Imago, 23: Miriam de Rosa, Elio Ugenti (eds.), “Media Processes. Moving Images Across Interface, Aesthetics and Gestural Policies”, 125-140.

Kirdemir, Baris & Kready, Joseph & Mead, Esther & Nihal Hussain, Muhammad & Agarwal, Nitin. (2021). Examining Video Recommendation Bias on YouTube. In: Boratto, L., Faralli, S., Marras, M., Stilo, G. (eds). Advances in Bias and Fairness in Information Retrieval. BIAS 2021. Communications in Computer and Information Science, 1418. Springer, Cham, 106-116. https://doi.org/10.1007/978-3-030-78818-6_10

Riboni, Ulrike Lune. (2016). « Juste un peu de vidéo. » La vidéo partagée comme langage vernaculaire de la contestation : Tunisie 2008-2014, PhD thesis, Sciences de l’information et de la communication, Université Paris 8 – Saint-Denis, p. 95. HAL, archives-ouvertes.fr. https://hal.archives-ouvertes.fr/tel-02392337/document